Have no fear of moving into the unknown.

Let’s start with the main spoiler: we sped up the app loading time by ~80 times. We achieved this through a comprehensive migration from Firebase Realtime Database to PostgreSQL, transitioning from a serverless architecture to a client-server application.

The user’s initial loading time ranged from 1 to 15 minutes. Screens would freeze, the app would glitch, and sometimes even crash. It wasn’t due to the lack of power in older smartphones but rather the interaction with data and how it was stored. Long loading times were observed even on an iPhone 14 Pro.

In the real world, trends dictate the use of technologies, and their application often extends beyond their intended purpose. We encountered an app built on the wave of popularity of NoSQL databases and cloud services. Therefore, an important part of the research was identifying performance bottlenecks, considering the set of technologies, and understanding the pitfalls.

We took on an application that had been running for a long time. Hence, the main requirement put forth by the client was that our changes should not hinder the addition of new solutions, the improvement of existing functionality, or the use of the application.

Exploring the issue

Initial data

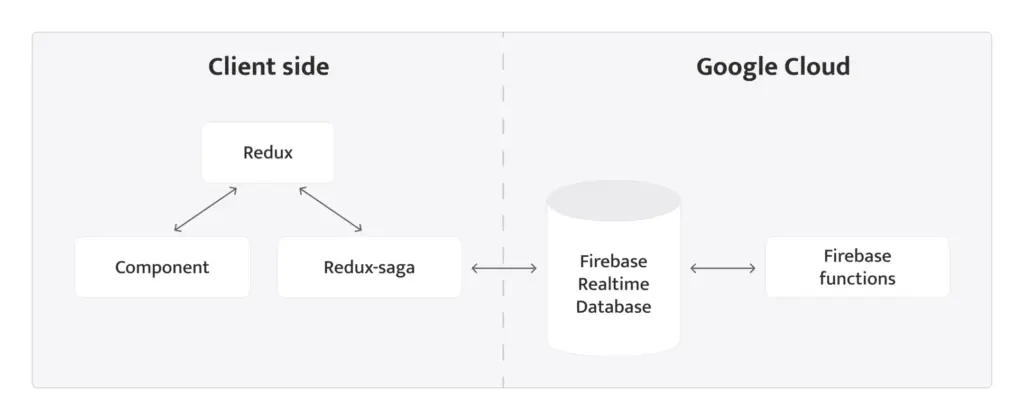

So, the application is written in React Native, Expo. User data is stored in the Firebase Realtime Database. All interactions with the database are handled in Redux Saga, and the retrieved data are subsequently placed in the Redux store.

Firebase Cloud Functions also interact with the data, reacting to changes in the database and performing useful activities: sending emails, creating invoices for payment, and sending notifications. Additionally, Cloud Functions can update the database. And changes in

the database can trigger other Cloud Functions, leading to a stream of uncontrolled changes.

- Firebase Realtime Database is a cloud database. Data is stored in JSON format and synchronized in real-time with all connected clients.

- Firebase Cloud Functions is a serverless platform that allows automatically running server-side code in response to events triggered by background events, HTTPS requests, Admin SDK, or Cloud Scheduler tasks.

- Redux is a state manager for JS applications.

- Redux-saga is a Redux side effect manager.

A poorly designed database can negatively affect the performance and reliability of the application. Choosing between NoSQL and SQL databases incorrectly can lead to performance, flexibility, and security issues for the application, which can have a negative impact on the business. Therefore, it is very important to entrust the selection and design of the database to specialists who have extensive experience in this field.

After analyzing the business logic of the application and delving into the code, we noted:

- multiple relationships between entities;

- data filtering that does not correspond to the capabilities of the selected database;

- an attempt to mimic SQL relationships in the database, resulting in hundreds of unnecessary queries;

- poorly structured entities.

All this raises doubts about the use of the Realtime Database, the functionality of which is significantly limited compared to SQL databases. The drawbacks of real-time database could have been overcome at the design stage by correctly defining indexing, optimizing structures, and restricting certain functionalities.

Custom access rules for the Firebase Realtime Database allowed us to prevent users from acting on behalf of others, accessing other people’s data, etc. The Firebase tool for permissions management is very powerful in skilled hands, but in this application, these restrictions prevented data from being obtained in array form.

As a result, several limitations overlapped: the incorrectly chosen database, poorly designed structure, and well-functioning access rules. This led to cascading database queries that could grow at least quadratically depending on the amount of data.

Let’s break it down with an example:

The user entity stores an array of chat IDs. Each chat has an array of message IDs and participant IDs. In the background, all chats are loaded one by one for the user. After loading each chat, messages and information about the interlocutor are loaded for each chat. These operations are necessary due to the requirement to sort chats by the time of the last message and display the actual name of the interlocutor in the chat header.

type User = {

chatIds: Record<string, true>;

};

type Chat = {

messageIds: Record<string, true>;

memberIds: Record<string, true>;

};

type Message = {

content: string;

createdAt: number;

userId: string;

};

type Database = {

users: Record<string, User>;

chats: Record<string, Chat>;

messages: Record<string, Message>;

};Code language: HTML, XML (xml)Due to the poorly designed database, almost all filtering and sorting were implemented on the client side. To support all relationships in NoSQL, most entities were significantly inflated, increasing the load on the application.

These mentioned issues became a performance bottleneck and heavily depended on the amount of data related to the user, especially noticeable for old users.

Migration planning

We wanted to reduce cascading queries. However, after studying the capabilities of the Firebase Realtime Database, we realized that the business requirements cannot be fully satisfied with it. Therefore, we decided on a radical step: migrating from Firebase to PostgreSQL.

We planned to migrate to new technology in 3 stages to avoid disrupting either the application performance or the development of new features.

Stage 1. Proxy Server

At this stage, we create our server, which will act as an intermediary between the application and the database. In the application, we replace requests to the Firebase Realtime Database with requests to our server. We also transfer the logic of Firebase Functions to the server.

Stage 2. Logic and Query Optimization

After creating the proxy server, the performance issue will still exist. Because we haven’t changed the way we work with data in the previous stage. This stage is aimed at significantly speeding up applications by utilizing the resources and capabilities of the server: from caching to implementing our own filtering and sorting.

Stage 3. Migrating from Firebase to PostgreSQL

At this stage, we need to design the database, replace all requests on the server to the Realtime Database with requests to PostgreSQL, and migrate the data from the previous database.

The previous stages can be completed incrementally, allowing us to work on new features and bug fixes in parallel with the migration. But this stage needs to be done in one step, as breaking complex relationships between tables and migrating them step by step is a very laborious step that can take too long, and is unreasonable expensive.

Since all the logic is on the server side and users already feel a significant improvement in performance, this stage can be considered the least priority.

Technology Stack We Decided On:

- NestJS

- GraphQL

- PostgreSQL

- TypeORM

Initial setup

First, we created a NestJS server using the Nest CLI, cleaned up unnecessary code, configured the settings, and set up GraphQL. Then we decided to standardize the GraphQL context for convenient usage.

Context as a puzzle piece

To create a context accessible in any resolver, we could have abstracted it into a separate module. This module would aggregate other modules that want to extend the context. However, we decided to try a different approach: each module is responsible for connecting the part

of the context that belongs to it.

Thus, our context manager had a logic that did not need to be updated all the time. Extending the context was done exclusively at the module level.

We created a service that provides the resulting context by combining the contexts created by other services.

import { Injectable } from "@nestjs/common";

import { GraphQlContextProviderService } from "./interface";

import { AppGraphQlContext, GraphQlBaseContext } from "$/app.types";

@Injectable()

export class GraphQlContextManagerService {

constructor(private contextProviders: GraphQlContextProviderService[]) {}

async createContext(ctx: GraphQlBaseContext): Promise<AppGraphQlContext> {

return this.contextProviders.reduce(

async (contextualPromise, contextProvider) => ({

...(await contextualPromise),

...(await contextProvider?.createContext(ctx)),

}),

Promise.resolve({})

) as Promise<AppGraphQlContext>;

}

}Code language: JavaScript (javascript)We created a module that will act as an aggregator of all context providers and the point of their injection into the GraphQLContextManagerService.

import { DynamicModule, Module, ModuleMetadata } from "@nestjs/common";

import { ParentType } from "../common/types";

import { GraphQlContextProviderService } from "./interface";

import { GraphQlContextManagerService } from "./service";

import { ContextRegistrationOptions } from "./types";

@Module({})

export class GraphQlContextManagerModule {

private static imports: Required<ModuleMetadata>["imports"] = [];

private static contexts: ParentType<GraphQlContextProviderService>[] = [];

private static providers: Provider[] = [

{

provide: GraphQlContextManagerService,

inject: GraphQlContextManagerModule.contexts,

useFactory: (...contextProviders: GraphQlContextProviderService[]) => {

return new GraphQlContextManagerService(contextProviders);

},

},

];

static forFeature(options: ContextRegistrationOptions): DynamicModule {

const { contexts, imports = [] } = options;

GraphQlContextManagerModule.contexts.push(...contexts);

GraphQlContextManagerModule.providers.push(...contexts);

GraphQlContextManagerModule.imports.push(...imports);

return {

module: GraphQlContextManagerModule,

imports,

providers: contexts,

exports: contexts,

};

}

static forRoot(): DynamicModule {

return {

module: GraphQlContextManagerModule,

imports: GraphQlContextManagerModule.imports,

providers: GraphQlContextManagerModule.providers,

exports: [GraphQlContextManagerService],

};

}

}Code language: JavaScript (javascript)Then we connect it to GraphQL.

import { ApolloDriver, ApolloDriverConfig } from "@nestjs/apollo";

import { Module } from "@nestjs/common";

import { ConfigModule } from "@nestjs/config";

import { GraphQLModule as NestJSGraphQLModule } from "@nestjs/graphql";

import { ScheduleModule } from "@nestjs/schedule";

import { GraphQlBaseContext, GraphQlConnectionParams } from "$/app.types";

import { CONFIG_PROVIDER, ConfigType } from "$/config";

import { GraphQlContextManagerModule } from "../graphql-context-provider/module";

import { GraphQlContextManagerService } from "../graphql-context-provider/service";

@Module({

imports: [

NestJSGraphQLModule.forRootAsync({

driver: ApolloDriver,

imports: [GraphQlContextManagerModule.forRoot()],

inject: [CONFIG_PROVIDER, GraphQlContextManagerService],

useFactory: (

config: ConfigType,

graphQLManagerService: GraphQlContextManagerService

): ApolloDriverConfig => ({

autoSchemaFile: true,

subscriptions: {

"graphql-ws": true,

"subscriptions-transport-ws": {

onConnect: (connectionParams: GraphQlConnectionParams) =>

graphQLManagerService.createContext({ connectionParams }),

},

},

playground: config.app.isDevelopment,

context: async (ctx: GraphQlBaseContext) =>

graphQLManagerService.createContext(ctx),

}),

}),

],

})

export class GraphQLModule {}Code language: JavaScript (javascript)Now we can extend the context from the respective module. We will demonstrate this with the example of the Common module:

import { Injectable } from "@nestjs/common";

import { GraphQlContextProviderService } from "../graphql-context-manager/interface";

import { AppGraphQlContext, GraphQlBaseContext } from "$/app.types";

@Injectable()

export class CommonContext implements GraphQlContextProviderService {

async createContext(

baseContext: GraphQlBaseContext

): Promise<Partial<AppGraphQlContext>> {

const { req, res, connectionParams } = baseContext;

return { req, res, connectionParams };

}

}Code language: JavaScript (javascript)Connecting the context to the module:

import { Module } from "@nestjs/common";

import { GraphQlContextManagerModule } from "../graphql-context-manager/module";

import { CommonContext } from "./context";

@Module({

imports: [

GraphQlContextManagerModule.forFeature({

contexts: [CommonContext],

}),

],

})

export class CommonModule {}Code language: JavaScript (javascript)Thus, we created a context manager and standardized the approach to extending the context, which is much more convenient than transforming one aggregator module into a repository of services and modules.

Firebase authentication

One of the core components of almost any application is authentication. In our application, we had two types of users: admins and regular users. Admins are privileged users with special status and maximum access level. Therefore, we needed to secure the routes.

Firstly, we determined that we would use token-based authentication; the token would be passed in the headers for HTTP requests or in the connection parameters for web sockets in the authorization field. For validation, we used the Admin SDK.

We also agreed that identity would represent which user is making the request to the server and would be stored in the GraphQL context.

export type AppGraphQlContext = {

identity: Identity | null;

// ...

};Code language: JavaScript (javascript)Using identity from the context, we were able to protect routes using NestJS guards:

- The

AuthGuardprotects routes from unauthorized users:

import { CanActivate, ExecutionContext, Injectable } from "@nestjs/common";

import { GqlExecutionContext } from "@nestjs/graphql";

import { AppGraphQlContext } from "$/app.types";

import { GuardBoolean } from "$modules/common/types";

@Injectable()

export class AuthGuard implements CanActivate {

canActivate(context: ExecutionContext): GuardBoolean {

const graphQlExecutionContext = GqlExecutionContext.create(context);

const { identity } =

graphQlExecutionContext.getContext<AppGraphQlContext>();

return Boolean(identity);

}

}Code language: JavaScript (javascript)- The

AdminGuardprotects routes from users who are not admins:

import { CanActivate, ExecutionContext, Injectable } from "@nestjs/common";

import { GqlExecutionContext } from "@nestjs/graphql";

import { Scope } from "../constants";

import { AppGraphQlContext } from "$/app.types";

import { GuardBoolean } from "$modules/common/types";

@Injectable()

export class AdminGuard implements CanActivate {

canActivate(executionContext: ExecutionContext): GuardBoolean {

const graphQlExecutionContext =

GqlExecutionContext.create(executionContext);

const { identity } =

graphQlExecutionContext.getContext<AppGraphQlContext>();

return identity?.scope === Scope.ADMIN;

}

}Code language: JavaScript (javascript)Modular subscriptions

Following the analogy of the graphql-context-manager, we also created a subscription-manager, which allows encapsulating subscriptions within the module itself.

We created an interface for subscription creation and basic types:

export interface SubscriptionService {

subscribe(): Promise<Unsubscribe> | Unsubscribe;

isDisabled?: () => boolean;

}Code language: JavaScript (javascript)import { ModuleMetadata } from "@nestjs/common";

import { ParentType } from "../common/types";

import { SubscriptionService } from "./interface";

export type SubscriptionRegistrationOptions = {

subscriptions: ParentType<SubscriptionService>[];

imports?: ModuleMetadata["imports"];

};

export type Unsubscribe = () => Promise<void> | void;Code language: JavaScript (javascript)We developed a service responsible for creating subscriptions, following the same subscription interface:

import { Inject, Injectable } from "@nestjs/common";

import { SUBSCRIPTIONS_PROVIDER } from "./constants";

import { SubscriptionService } from "./interface";

@Injectable()

export class SubscriptionManagerService implements SubscriptionService {

constructor(

@Inject(SUBSCRIPTIONS_PROVIDER)

private subscriptions: SubscriptionService[]

) {}

async subscribe() {

const unsubscribes = await Promise.all(

this.subscriptions.map(async (subscriber) => subscriber.subscribe())

);

return async () => {

await Promise.all(unsubscribes.map((unsubscribe) => unsubscribe()));

};

}

}Code language: JavaScript (javascript)In the module, we implemented logic for filtering and aggregating subscriptions:

import {

DynamicModule,

Module,

ModuleMetadata,

Provider,

} from "@nestjs/common";

import { ParentType } from "../common/types";

import { SUBSCRIPTIONS_PROVIDER } from "./constants";

import { SubscriptionManagerService } from "./service";

import { SubscriptionRegistrationOptions, SubscriptionService } from "./types";

@Module({})

export class SubscriptionManagerModule {

private static imports: Required<ModuleMetadata>["imports"] = [];

private static subscriptions: ParentType<SubscriptionService>[] = [];

private static providers: Provider[] = [

SubscriptionManagerService,

{

provide: SUBSCRIPTIONS_PROVIDER,

useFactory: (...subscriptions: SubscriptionService[]) =>

subscriptions.filter((subscription) => !subscription.isDisabled?.()),

inject: SubscriptionManagerModule.subscriptions,

},

];

static forFeature(options: SubscriptionRegistrationOptions): DynamicModule {

const { subscriptions, imports = [] } = options;

SubscriptionManagerModule.subscriptions.push(...subscriptions);

SubscriptionManagerModule.providers.push(...subscriptions);

SubscriptionManagerModule.imports.push(...imports);

return {

module: SubscriptionManagerModule,

imports,

providers: subscriptions,

exports: subscriptions,

};

}

static forRoot(): DynamicModule {

return {

module: SubscriptionManagerModule,

imports: SubscriptionManagerModule.imports,

providers: SubscriptionManagerModule.providers,

exports: [SubscriptionManagerService],

};

}

}Code language: JavaScript (javascript)Then we created a module that sets up subscriptions when the application starts and unsubscribes before it closes:

import { Module } from "@nestjs/common";

import { SubscriptionManagerModule } from "../subscription-manager/module";

import { SubscriptionManagerService } from "../subscription-manager/service";

@Module({

imports: [SubscriptionManagerModule.forRoot()],

})

export class SubscriberModule {

private unsubscribe: () => Promise<void>;

constructor(private subscriptionManagerService: SubscriptionManagerService) {}

async onApplicationBootstrap() {

this.unsubscribe = await this.subscriptionManagerService.subscribe();

}

async beforeApplicationShutdown() {

await this.unsubscribe();

}

}Code language: JavaScript (javascript)Apollo Client in Sagas

Next, we proceeded to set up Apollo Client in the application to

interact with the server.

We added the GraphQL endpoint API_URL to the environment

variables, which was then used to create an Apollo Link.

We created an Apollo instance with a network-only fetch

policy to keep data interaction immutable and predictable, although we

planned to configure cache-based data interaction flow in the future:

import { ApolloClient, InMemoryCache } from "@apollo/client";

import link from "./link";

const apolloClientInstance = new ApolloClient({

link,

defaultOptions: {

query: {

errorPolicy: "all",

fetchPolicy: "network-only",

},

mutate: {

errorPolicy: "all",

fetchPolicy: "network-only",

},

},

cache: new InMemoryCache(),

});

export default apolloClientInstance;Code language: JavaScript (javascript)To make authentication work, we added the Firebase authentication token to the authorization header for HTTP requests and the same field in the connection params for subscriptions. We

obtained the authentication token using the utility:

import auth from "firebase/auth";

export const getAuthorizationToken = async () => {

const token = await auth.currentUser?.getIdToken();

return token ? `Bearer ${token}` : "";

};Code language: JavaScript (javascript)To interact GraphQL with Redux Saga, we created 3 helpers:

import {

ApolloClient,

ApolloQueryResult,

OperationVariables,

QueryOptions,

} from "@apollo/client";

import { call } from "redux-saga/effects";

import apolloClient from "../instance";

/**

* Use apolloQuery with yield*

*

* @example

* const { data } = yield* apolloQuery<Data, Variables>({ query, variables })

* */

export function* apolloQuery<

Data = unknown,

Variables extends OperationVariables = OperationVariables

>(queryOptions: QueryOptions<Variables, Data>) {

const result: ApolloQueryResult<Data | null> = yield call(

apolloClient.query,

queryOptions

);

return result;

}Code language: JavaScript (javascript)We made the apolloMutation helper similar to apolloQuery. And for the subscription helper, we used eventChannel:

import {

ApolloClient,

FetchResult,

OperationVariables,

SubscriptionOptions,

} from "@apollo/client";

import { END, eventChannel } from "redux-saga";

import apolloClient from "../instance";

/**

* Use apolloSubscription with yield*

*

* @example

* const channel = yield* apolloSubscription<Data, Variables>({ query, variables })

* */

export function* apolloSubscription<

Data = unknown,

Variables extends OperationVariables = OperationVariables

>(subscriptionOptions: SubscriptionOptions<Variables, Data>) {

const apolloClient: ApolloClient<unknown> = yield select(selectApolloClient);

const channel = eventChannel<FetchResult<Data>>((emit) => {

const observable = apolloClient.subscribe(subscriptionOptions);

const subscription = observable.subscribe({

next: emit,

complete: () => emit(END),

});

return () => subscription.unsubscribe();

});

return channel;

}Code language: JavaScript (javascript)Components of server-proxy migration

After the initial setup of the server and client, we proceeded directly to the migration.

Read-write operations

Let’s illustrate the migration of queries and mutations using the example of the user update mutation.

function* updateUser() {

yield takeEvery(’UPDATE_USER’, function* ({ user }) {

const { uid } = yield select(state => state.auth.user)

yield firebase

.database()

.ref(’users’)

.child(uid)

.update(user)

})

}Code language: JavaScript (javascript)The updateUser saga subscribed to the UPDATE_USER event type. The listener accepted an event with new data that would be written to the active user by ID.

To migrate this functionality, we started with the description of UpdateUserInput for GraphQL:

import { Field, ObjectType } from "@nestjs/graphql";

@InputType()

export class UpdateUserInput {

@Field(() => String, { nullable: true })

name?: string;

@Field(() => String, { nullable: true })

email?: string;

}Code language: JavaScript (javascript)Then we added the updateUser method to UserService:

@Injectable()

export class UserService {

async updateUser(id: string, input: UpdateUserInput): Promise<UserObject> {

await this.firebaseService

.getApp()

.database()

.ref(’users’)

.child(id)

.update(input)

return this.getUser()

}

}Code language: JavaScript (javascript)Created the updateUser resolver:

@Resolver()

export class UserResolver

@Mutation(() => UserObject)

@UseGuards(AuthGuard)

async updateUser(

@AuthIdentity() identity: Identity,

@Args('input') input: UpdateUserInput

): Promise<UserObject | null> {

return this.userService.updateUser(identity.id, input);

}

}Code language: JavaScript (javascript)Created an API helper on the client side:

import { FetchPolicy, gql } from "@apollo/client";

import { apolloQuery } from "modules/ApolloClient/utils/saga";

import { User } from "../../types";

type UpdateUserInput = {

email?: string;

name?: string;

};

type Data = {

updateUser: User;

};

type Variables = {

input: UpdateUserInput;

};

const MUTATION = gql`

mutation UpdateUser($input: UpdateUserInput!) {

updateUser(input: $input) {

id

name

email

}

}

`;

export function* updateUserSaga(input: UpdateUserInput) {

const { data, error } = yield* apolloQuery<Data, Variables>({

query: MUTATION,

variables: {

input,

},

});

return {

updateUserData: data?.updateUser,

updateUserError: error,

};

}Code language: JavaScript (javascript)There’s a question, why do we break the rule of using Saga in places with yield*? This way, we allowed TS to understand the type returned by the generators automatically. Here’s an example:

function* randomSaga() {

return Math.random();

}

export function* test() {

// const value: number

const value = yield* randomSaga();

return value;

}Code language: JavaScript (javascript)We did this with an understanding of the potential testing issues that may arise, but it greatly simplifies writing GraphQL-related code in sagas due to the large number of types that may be needed from ApolloClient.

Next, we replaced the request from the Firebase Realtime Database with our API in the saga:

function* updateUser() {

yield takeEvery(’UPDATE_USER’, function* ({ user }) {

yield* updateUserSaga(user)

})

}Code language: JavaScript (javascript)Realtime subscriptions — a pain in the neck

To create a subscription in sagas, we need a channel to deliver data using the take effect. This was standardized using getEventChannelForFirebaseRef:

import { eventChannel } from "redux-saga";

import firebase from "firebase/compat/app";

export const getEventChannelForFirebaseRef = (

ref: firebase.database.Reference,

type: firebase.database.EventType

) => {

const channel = eventChannel((emit) => {

ref.on(type, (snapshot) => {

emit({

values: snapshot.val(),

key: snapshot.key,

});

});

return () => {

ref.off(type);

};

});

return channel;

};Code language: JavaScript (javascript)All subscriptions to updates (value, child_added, child_updated, child_removed) were created using this helper. It works as follows:

- Create a reference to the JSON branch on which we want to create a subscription.

- Subscribe to updates using a channel that triggers a new iteration in the

whileloop when a Firebase event is triggered on the JSON branch. - Create a Redux action to update the store or trigger other sagas.

Let’s illustrate this with an example of migrating the child_added subscription to news.

funciton* subscribeToNewsAdded() {

yield takeEvery(’USER_LOGGED_IN’, function* () {

const ref = yield firebase

.database()

.ref('news')

const channel = yield getEventChannelForFirebaseRef(ref, 'child_added')

while (true) {

const { values } = yield take(channel)

yield put({

type: 'NEWS_ADDED',

news: values

})

}

})

}Code language: JavaScript (javascript)On the server, we created NewsObject .

@ObjectType()

export class NewsObject {

@Field(() => String)

id: string;

@Field(() => String)

content: string;

}Code language: JavaScript (javascript)Then we created a helper for subscriptions.

import { EventType, Query, Snapshot } from 'firebase-admin/database';

export const subscribeToFirebaseEvent = (

ref: Query,

eventType: Event,

eventHandler: (snapshot: Snapshot) => void;

): (() => void) => {

ref.on(eventType, eventHandler);

return () => ref.off(eventType, eventHandler);

};Code language: JavaScript (javascript)The child_added event, according to the Firebase specification, triggers on each existing item and then on each new item. But it’s important for us to control data loading and organize a

subscription strictly for creating new objects, so we were forced to use a peculiar construction:

const unsubscribe = subscribeToFirebaseEvent(

firebaseRef.limitToLast(1),

"child_added",

skipFirst(handler)

);Code language: JavaScript (javascript)We limit the reference to the last created child and subscribe to this reference. Based on the specification, the child_added event triggers on the last existing item and then on newly added items, so we ignore the first handler call. Using this, we created NewsSubscription .

@Injectable()

export class NewsSubscription implements SubscriptionService {

private readonly logger = new Logger(ReviewSubscription.name);

constructor(private readonly newsService: NewsService) {}

subscribe(): Unsubscribe {

return this.subscribeToNewsAdded();

}

private subscribeToNewsAdded() {

return subscribeToFirebaseEvent(

this.newsService.newsRef.limitToLast(1),

"child_added",

skipFirst(this.childAddedHandler)

);

}

private childAddedHandler = (snapshot: DataSnapshot) => {

try {

if (!snapshot.key) {

return;

}

this.newsService.emitNewsAdded({

id: snapshot.key,

...snapshot.val(),

});

} catch (error) {

this.logger.error(error);

}

};

}Code language: JavaScript (javascript)Next, we added logic for news emitting in the service.

@Injectable()

export class NewsService {

readonly newsRef: Reference;

constructor(

@Inject(PUBSUB_PROVIDER) private readonly pubSub: PubSub,

firebaseService: FirebaseService

) {

const database = firebaseService.getApp().database();

this.newsRef = database.ref(FirebaseDatabasePath.NEWS);

}

public emitNewsAdded(news: News): void {

this.pubSub.publish("newsAdded", {

newsAdded: news,

});

}

}Code language: JavaScript (javascript)Created a subscription in the resolver.

@Resolver()

@UseGuards(AuthGuard)

export class NewsResolver {

constructor(

@Inject(PUBSUB_PROVIDER) private readonly pubSub: PubSub,

private readonly newsService: NewsService

) {}

@Subscription(() => NewsObject)

newsAdded() {

return this.pubSub.asyncIterator("newsAdded");

}

}Code language: JavaScript (javascript)And connected subscription, service and resolver to the module.

@Module({

imports: [

FirebaseModule,

GraphQlPubsubModule,

SubscriptionManagerModule.forFeature({

imports: [NewsModule],

subscriptions: [NewsSubscription],

}),

],

providers: [NewsService, NewsResolver],

exports: [NewsService],

})

export class NewsModule {}Code language: JavaScript (javascript)After that, we proceeded to migrate to the front end and created a GraphQL-Saga helper.

import { FetchResult, gql } from "@apollo/client";

import { take } from "redux-saga/effects";

import { SUBSCRIPTION } from "./subscription";

import { apolloSubscription } from "modules/ApolloClient/utils/saga";

import { News } from "types";

type Data = {

newsAdded: News;

};

export const SUBSCRIPTION = gql`

subscription NewsAdded {

newsAdded {

id

content

}

}

`;

export function* subscribeToNewsAddedSaga(callback: (news: News) => Generator) {

const channel = yield* apolloSubscription<Data>({

query: SUBSCRIPTION,

});

try {

while (true) {

const { data }: FetchResult<Data> = yield take(channel);

if (data) {

yield* callback(data.newsAdded);

}

}

} finally {

channel.close();

}

}Code language: JavaScript (javascript)We use this helper to replace the previous Firebase interaction logic.

funciton* subscribeToNewsAdded() {

yield takeEvery(’USER_LOGGED_IN’, function* () {

yield* subscribeToNewsAddedSaga(function* (news) => {

yield put({

type: 'NEWS_ADDED',

news,

})

})

})

}Code language: JavaScript (javascript)We migrated the subscription to the event of news adding – and when news is created, every user can see it in their apps. But Firebase technology has its peculiarities: the child_added subscription runs for all existing children and only then remains as a handler for new items. This means that now we’ve broken the app’s functionality: old news is not displayed to the user. Thevalue subscription has the same logic, it triggers once when the subscription is opened and then with each update.

But we fixed this very easily: we implemented the fetchNews logic, which was simply hidden in the child_added subscription.

Copy-paste Cloud Functions

Next, we started migrating logic from Cloud Functions, which was easier than migrating to the mobile app. This is because functions operate in the same environment as the server. The algorithm of actions was simple:

- Define what a specific function does

- Transfer the logic to the appropriate place on the server

- Delete the function

Let’s consider an example with a function related to granting administrator rights. When a change occurs in the adminUsers table, this function updates the isAdmin field for the user:

import * as functions from "firebase-functions";

import * as admin from "firebase-admin";

export const setAdminStatus = functions.database

.ref("/adminUsers/{pushId}")

.onWrite(async (snap, context) => {

const database = admin.app().database();

const isAdmin = snap.val();

const userId = context.params.pushId as string;

await database.ref("users").child(userId).child("isAdmin").set(isAdmin);

});Code language: JavaScript (javascript)The adminUsers branch represents a Set structure, where it can quickly determine whether a user is an admin or not. The setAdminStatus Firebase function subscribes to updates in the userId branch in adminUsers, indicating granting or revoking administrator rights from the user with this ID.

To replicate the logic of this function, we don’t need to create a subscription on the server because all changes in the database are initiated from the server. This means we need to transfer the logic from the setAdminStatus Firebase Function to the location of the change it’s subscribed to.

On the server, the change in user rights management occurs in theeditUserAdminStatus method of UserService:

export class UserService {

async editUserAdminStatus(args: UserAdminStatusEditInput): Promise<void> {

const { id, isAdmin } = args;

await this.database

.ref("adminUsers")

.child(id)

.set(isAdmin || null);

}

}Code language: JavaScript (javascript)As you can see, this function changes the value of the userId branch in adminUsers. We add an update to the user table at the end to synchronize the role change with the user.

export class UserService {

async editUserAdminStatus(args: UserAdminStatusEditInput): Promise<void> {

const { id, isAdmin } = args;

await this.database

.ref("adminUsers")

.child(id)

.set(isAdmin || null);

await this.database.ref("users").child(id).update({ isAdmin });

}

}Code language: JavaScript (javascript)After this, we can delete the setAdminStatus Cloud Function.

Unexpected obstacles

Corrupted database

We began migrating all requests to the Firebase Realtime Database from the client to the server. At this point, we encountered the main problem of this database: entire type control lies on the developer’s conscience. If in SQL, it’s impossible to insert or update a column that doesn’t exist

or which type doesn’t match the expected one, then in the Realtime Database, types are not as important. This problem led to many errors, both on the server and the client side.

Let’s list the main reasons that caused errors:

- The database contained data of the wrong type;

- The client tried to send data of an inappropriate type to the

server; - The client sent unnecessary fields not specified in the GraphQL

schema; - Adapted GraphQL entity types didn’t match client types;

nulloverwrote existing data;undefinedwas not supported by Firebase.

Errors caused by unnecessary fields in the input could be disabled using the GraphQL config, but we decided to implement a strict approach to working with data, which was supposed to fix all of the listed problems. We investigated which data was corrupted and created migrations to fix it.

One of the capabilities of GraphQL is type checking. This allowed us to protect data from further corruption.

But the most important solution that was supposed to clean up the database and eliminate these errors was creating a strictly typed adapter.

Conditions for such an adapter:

- The use of the spread operator is prohibited: to avoid unnecessary fields remaining in the entity.

- All fields must be enumerated: to ensure that existing optional fields are not lost or deleted from the database. To ensure that, we created the

TypeSafeRequiredtype, which makes optional fields mandatory.

export type OptionalKeys<T> = {

[K in keyof T]-?: {} extends Pick<T, K> ? K : never;

}[keyof T];

export type TypeSafeRequired<T> = Omit<T, OptionalKeys<T>> & {

[Key in OptionalKeys<T>]: T[Key] | undefined;

};Code language: JavaScript (javascript)- All fields of type structure must be extracted into a separate adapter: to follow the single responsibility principle and keep track of the necessary fields at the structure level.

- The adapter must remove fields with

undefinedvalues under the hood: to interact with the database without the need to trackundefined.

export interface BaseFirebaseAdapter<

FirebaseType extends Record<string, unknown>,

GraphQLType extends Record<string, unknown>

> {

toFirebase(graphql: GraphQLType): FirebaseType;

toGraphQL(firebase: FirebaseType): GraphQLType;

}

export class FirebaseAdapter<

FirebaseType extends Record<string, unknown>,

GraphQLType extends Record<string, unknown>

> implements

BaseFirebaseAdapter<

TypeSafeRequired<FirebaseType>,

TypeSafeRequired<GraphQLType>

>

{

constructor(

private readonly adapter: BaseFirebaseAdapter<

TypeSafeRequired<FirebaseType>,

TypeSafeRequired<GraphQLType>

>

) {}

toFirebase = (graphql: GraphQLType): TypeSafeRequired<FirebaseType> => {

return omitUndefined(this.adapter.toFirebase(graphql));

};

toGraphQL = (firebase: FirebaseType): TypeSafeRequired<GraphQLType> => {

return this.adapter.toGraphQL(firebase);

};

}Code language: PHP (php)Often where an adapter is needed, the class it relates to is also used. So we concluded that it’s convenient to keep the adapter alongside the class and use it with the bridge pattern. Here’s an example of creating an adapter:

@ObjectType()

export class UserObject {

@Field(() => String)

id: string;

@Field(() => String)

name: string;

@Field(() => String)

email: string;

static adapter: FirebaseAdapter<User, UserObject>;

}

UserObject.adapter = new FirebaseAdapter({

toFirebase: (graphql) => ({

id: graphql.id,

name: graphql.name,

email: graphql.email,

}),

toGraphQL: (firebase) => ({

id: firebase.id,

name: firebase.name,

email: firebase.email,

}),

});Code language: JavaScript (javascript)Therefore, wherever we interacted with Firebase, we added the usage

of adapters:

@Injectable()

export class UserService {

constructor(private readonly firebaseService: FirebaseService) {}

async getUserById(id: string): Promise<UserObject | null> {

const userSnapshot = await this.firebaseService

.getApp()

.database()

.ref(’users’)

.child(id)

.once('value')

const candidate = userSnapshot.val()

return candidate && UserObject.adapter.toGraphQL({

id, ...candidate

})

}

}Code language: JavaScript (javascript)After implementing the adapters, development slowed down a bit because additional adapters had to be created for each entity. However, the errors listed at the beginning of this section were resolved, and we no longer encountered them. The data flow became more controlled, and

there was confidence that all interactions with the database would work as expected.

For example, UpdateUserInput could overwrite data. But we specified in the adapter for it to simply ignore null.

type FirebaseUpdateUserInput = Partial<Pick<User, "name" | "email">>;

@InputType()

export class UpdateUserInput {

@Field(() => String, { nullable: true })

name?: string | null;

@Field(() => String, { nullable: true })

email?: string | null;

static adapter: FirebaseAdapter<UpdateUserInput, FirebaseUpdateUserInput>;

}

UpdateUserInput.adapter = new FirebaseAdapter({

toFirebase: (graphql) => ({

name: graphql.name ?? undefined,

email: graphql.email ?? undefined,

}),

toGraphQL: (firebase) => ({

name: firebase.name,

email: firebase.email,

}),

});Code language: JavaScript (javascript)And we used it in the necessary method of UserService.

@Injectable()

export class UserService {

async updateUser(id: string, input: UpdateUserInput): Promise<UserObject> {

const firebaseInput = UpdateUserInput.adapter.toFirebase(input)

await this.firebaseService

.getApp()

.database()

.ref(’users’)

.child(id)

.update(firebaseInput)

return this.getUser()

}

}Code language: JavaScript (javascript)Forced optimization

In the beginning, the migration plan looked promising. We were supposed to replace database queries with server requests. Everything was going according to plan. But closer to the middle of the proxy-server migration stage, the number of complaints about the slow performance of applications increased rapidly.

Firebase exchanges data via WebSocket, which is faster than HTTP requests. We understood that the proxy solution would slow down the application. However, we thought that against the backdrop of existing performance issues, this delay would not be noticeable to users.

This affected our plans. We decided to combine the transition to the proxy server with query optimization. First, we abandoned sequential requests one by one, replacing them with batched paginated requests. Second, we added chat filtering to avoid loading unnecessary chats into

the application.

export class ChatService {

async getAllChats(): Promise<ChatObject[]> {

const allChatsSnapshot = await this.firebaseService

.getApp()

.database()

.ref(FirebaseDatabasePath.Chat)

.get();

const allChatsRecord: Record<string, Chat> = allChatsSnapshot.val() || {};

return Object.entries(allChatsRecord).map(([id, chat]) =>

ChatObject.adapter.toGraphQL({ id, ...chat })

);

}

async getChatList(

args: ChatListArgs,

identity: Identity

): Promise<ChatListObject> {

const { offset, limit, filter, order } = args;

const { id } = identity;

let nodes = await this.getAllChats();

nodes = this.filterService.filter(nodes, { ...filter, userId: id });

nodes = this.sorterService.sort(nodes, order);

const total = nodes.length;

return paginate({

limit,

offset,

nodes,

total,

});

}

}Code language: JavaScript (javascript)The getAllChat method fetches all chats from the database. Then the filtering service filters the chats based on the parameters passed in the filter config, the sorting service sorts them according to the order config, and the paginate helper divides the data into pages using limit and offset and forms a standard response type:

type Paginated<Node> = {

total: number;

hasNextPage: boolean;

nodes: Node[];

};Code language: HTML, XML (xml)On the client side, we created a saga that fetches all chats:

import { put, select } from "redux-saga/effects";

import { SortOrder } from "modules/Common/constants";

import { Pagination } from "modules/Common/interfaces";

import { selectChatListPagination } from "../../selectors";

import {

ChatListLoaded,

setChatListError,

setChatListFetchingEnded,

setChatListFetchingStarted,

} from "../../actions";

import { getChatListSaga } from "../../graphql";

import { ChatOrderColumn, ChatStatus } from "../../constants";

export function* fetchChatListWorker() {

try {

const { limit, offset }: Pagination = yield select(

selectChatListPagination

);

yield put(setChatListFetchingStarted());

const { chatListData, chatListError } = yield* getChatListSaga({

limit,

offset,

filter: {

status: ChatStatus.ACTIVE,

},

ordersBy: [

{

column: ChatOrderColumn.LAST_MESSAGE_SENT_AT,

order: SortOrder.DESC,

},

{

column: ChatOrderColumn.CREATED_AT,

order: SortOrder.DESC,

},

],

});

if (chatListData) {

yield put(chatListLoaded(chatListData));

}

if (chatListError) {

yield put(setChatListError(chatListError));

}

} finally {

yield put(setChatListFetchingEnded());

}

}Code language: JavaScript (javascript)We created a saga responsible for managing concurrent requests to load the next page of news. This saga waits for the completion of the previous loading and a new “load more” event and then calls fetchMoreChatListWorker:

import { call, take } from "redux-saga/effects";

import { loadMoreChatList, setChatListFetchingEnded } from "../../actions";

import { fetchMoreChatListWorker } from "./workers";

export function* fetchMoreWatcher() {

while (true) {

// Wait until the previous fetching was done

yield take(setChatListFetchingEnded.getType());

// Listen load more action

yield take(loadMoreChatList.getType());

yield call(fetchMoreChatListWorker);

}

}Code language: JavaScript (javascript)fetchMoreChatListWorker increments pagination and fetches the next page if possible:

import { put, select } from "redux-saga/effects";

import { Pagination } from "modules/Common/interfaces";

import { selectChatListPagination } from "../../selectors";

import {

fetchChatList,

incrementChatListPaginationOffset,

} from "../../actions";

export function* fetchMoreChatListWorker() {

const { offset, limit, total }: Pagination = yield select(

selectChatListPagination

);

if (offset + limit >= total) {

return;

}

yield put(incrementChatListPaginationOffset());

yield put(fetchChatList());

}Code language: JavaScript (javascript)Database as a cache

We faced a very heavy server load due to the large amount of data being fetched simultaneously. Additionally, the waiting time during pagination was constant but too long for some tables.

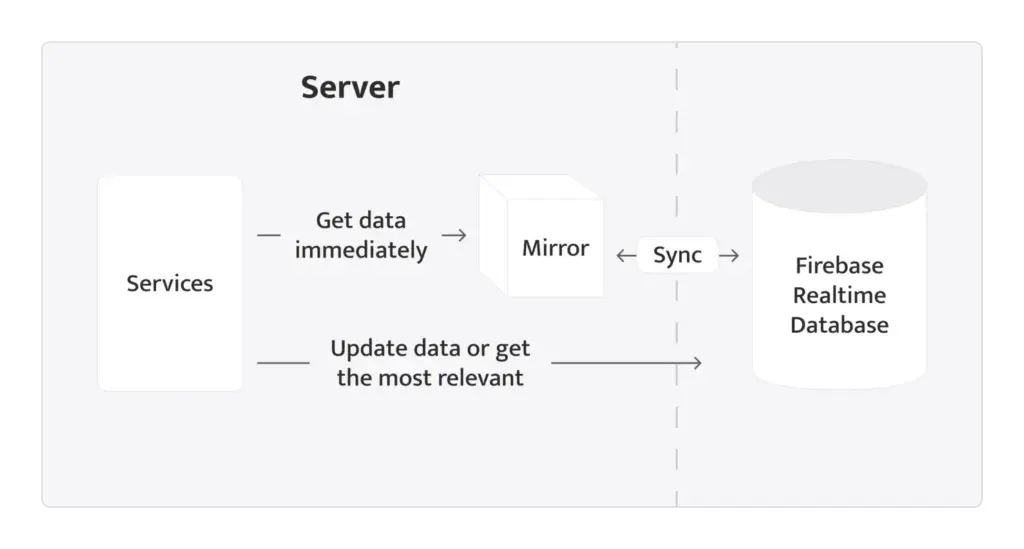

We implemented filtering, sorting, and pagination on the server by loading the entire necessary table onto the server. This allowed us to work with the data very flexibly. However, for each request to the route implementing this functionality, the server sends a request to Firebase to load the latest data. Therefore, parallel requests to the same route created additional load on the server. But is this necessary, considering the data synchronization mechanism that Firebase operates on? It was this thought that led us to create a mirror on the server, allowing us to receive all data instantly.

FirebaseMirror should be able to:

- Prefetch all data related to the Firebase reference

- Subscribe to events:

child_added,child_removed,child_updated - Provide an item by key

- Provide all available items

To implement the first two points, we decided to use SubscriptionManager and extract all dependent logic into the parent class. Thus, we obtained the abstract class FirebaseMirrorService.

import { FirebaseMirrorCoreService } from "./interface";

export abstract class FirebaseMirrorService<

Entity

> extends FirebaseMirrorCoreService<Entity> {

getByKey(key: string): Entity | undefined {

return this.getTableData().get(key);

}

getAll(): Array<[string, Entity]> {

return Array.from(this.getTableData());

}

}Code language: JavaScript (javascript)Let’s consider how we connected the mirror using the example of the news module. We created NewsMirror.

import { Injectable } from "@nestjs/common";

import { News } from "types";

import { FirebaseDatabasePath } from "../firebase/constants";

import { FirebaseService } from "../firebase/service";

import { FirebaseMirrorService } from "../firebase/firebase-mirror/service";

@Injectable()

export class NewsMirror extends FirebaseMirrorService<News> {

constructor(firebaseService: FirebaseService) {

const database = firebaseService.getApp().database();

super(database.ref(FirebaseDatabasePath.NEWS));

}

}Code language: JavaScript (javascript)We connected the mirror to the module using the subscription manager.

@Module({

imports: [

FirebaseModule,

GraphQlPubsubModule,

SubscriptionManagerModule.forFeature({

imports: [FirebaseModule],

subscriptions: [NewsMirror],

}),

],

providers: [NewsService, NewsResolver],

})

export class NewsModule {}Code language: JavaScript (javascript)We used this mirror in the key query that is used in the newsList resolver.

@Injectable()

export class NewsService {

constructor(private readonly newsMirror: NewsMirror) {}

private async getAllNews(): Promise<NewsObject[]> {

return this.newsMirror

.getAll()

.map(([id, news]) => NewsObject.adapter.toGraphQL({ id, ...news }));

}

}Code language: JavaScript (javascript)Now all requests for getAllNews are processed almost instantly, thanks to the use of the mirror. We replaced direct queries with requests to the corresponding mirrors wherever it was applicable. This significantly reduced the server response time, thus greatly

speeding up the application.

However, it turned out that the subscription to child_added did not work as we expected.

Previously, we used the construct:

const unsubscribe = subscribeToFirebaseEvent(

firebaseRef.limitToLast(1),

"child_added",

skipFirst(handler)

);Code language: JavaScript (javascript)It worked because a new entry was added with an ID greater than the previous one, and the reference was limited to the last entry sorted by the table’s ID. Therefore, according to the child_added specification, it triggers on the last existing item and then on each addition of a new item.

However, in our database, there were tables with keys generated not using the Firebase API but using Uuid, which does not guarantee each new ID is greater than the previous ID in the table. This approach no longer worked. So, we relied on Firebase’s guarantee that the value event occurs only after the child_added event is triggered for all existing items.

Having dealt with the issue of Firebase subscriptions and events, we can now demonstrate FirebaseMirrorCoreService:

import {

SubscriptionService,

Unsubscribe,

} from "../../subscription-manager/subscription-manager.types";

import {

FirebaseSnapshot,

FirebaseTable,

FirebaseTableReference,

} from "../firebase.types";

import { subscribeToFirebaseEvent } from "../firebase.utils";

export abstract class FirebaseMirrorCoreService<Entity>

implements SubscriptionService

{

static dataByTableName: Map<string, Map<string, unknown>> = new Map();

protected readonly tableName: string;

constructor(protected readonly reference: FirebaseTableReference<Entity>) {

this.tableName = this.getTableName(reference);

this.initializeTableData();

}

public async subscribe(): Promise<Unsubscribe> {

// * Due to the way firebase child_added works, it emits child_added event for every single node in collection

// * then triggers for every new one

// * await this.reference.once("value") ensures that all the children nodes are loaded so that we can continue with initializing the table

const childAddedUnsubscribe = subscribeToFirebaseEvent(

this.reference,

"child_added",

this.entityAddedOrUpdatedHandler

);

// * once('value') is triggered only after all child_added finished emitting

// * https://firebase.google.com/docs/database/admin/retrieve-data?hl=en#section-event-guarantees

await this.reference.once("value");

const unsubscribes = [

subscribeToFirebaseEvent(

this.reference,

"child_changed",

this.entityAddedOrUpdatedHandler

),

childAddedUnsubscribe,

subscribeToFirebaseEvent(

this.reference,

"child_removed",

this.entityRemovedHandler

),

];

return () => unsubscribes.forEach((unsubscribe) => unsubscribe());

}

protected getTableData(): Map<string, Entity> {

const { dataByTableName } = FirebaseMirrorCoreService;

const { key: tableName } = this.reference;

return dataByTableName.get(tableName || "") as Map<string, Entity>;

}

private entityAddedOrUpdatedHandler = (

snapshot: FirebaseSnapshot<Entity>

): void => {

const { key } = snapshot;

const candidate = snapshot.val();

if (key && candidate) {

this.getTableData().set(key, candidate);

}

};

private entityRemovedHandler = (snapshot: FirebaseSnapshot<Entity>): void => {

const { key } = snapshot;

const candidate = snapshot.val();

if (key && candidate) {

this.getTableData().delete(key);

}

};

private getTableName(reference: FirebaseTableReference<Entity>): string {

const { key: tableName } = reference;

if (!tableName) {

throw new Error("Table name is not defined");

}

return tableName;

}

private initializeTableData(): void {

const { dataByTableName } = FirebaseMirrorCoreService;

if (!dataByTableName.has(this.tableName)) {

dataByTableName.set(this.tableName, new Map());

}

}

}Code language: JavaScript (javascript)Migration to PostgreSQL

By this stage, we had already sped up the application by ~80 times. We achieved this by adding server-side filtering and pagination and changing the data retrieval logic. But this solution has a drawback: it puts a heavy load on the server.

We had to increase the memory limits of the server from 512MB to 8 GB. However, this number may be higher or lower for you depending on the stage when you decided to undertake this migration and how much data is stored in Firebase. Therefore, the next step of replacing the NoSQL database with SQL was no less important, although it did not have as significant an impact on the speed of the application.

Database design

We planned the structure of the database in DBML. This requires a thorough understanding of the application logic, identifying the purpose of each field in the current database, and delineating relationships and dependencies between entities. This is a complex task and should only be entrusted to experienced professionals.

Delving into database design is a topic for a separate article. However, we will share some things we encountered during this process.

DBML does not have the ability to split code into files. It is convenient to work with during the application architecture planning stage rather than describing all existing models. Problems arise when there are more than 20 entities and tables.

We had 43 tables and 15 auxiliary enums describing statuses and types of entities. Therefore, we decided to separate tables by files and merge them into the final output.dbml.

Working with DBML in VS Code using the DBML Live Preview extension was convenient for seeing the intermediate result – a separate table – and the resulting schema.

DBML syntax applies the following limitations to fit the multi-file approach:

- References to other tables in a separate table file should be hidden to avoid errors. Therefore, we agreed that

//@Refwould be converted toRefin the resulting file. This allowed us to describe relationships in comments without breaking the preview of a

separate table. - References should not be repeated. Therefore, we decided to leave references only under the table containing the foreign key.

- All enums must be declared before use. Therefore, we defined that files ending with

.enum.dbmlare for enums, and these files would be written to the resulting schema first.

Designing the database, we tried to improve structures, standardize, and correct field names and types. The number of tables was significant, so changes were made with the condition that a comment describing the nature of the changes was added to fields/tables.

Enum Scope {

ADMIN

USER

}Enum UserStatus {

ACTIVE

DELETED

BLOCKED

}Table user {

id varchar [primary key, note: 'Firebsae Auth UUID']

created_at timestamp [not null]

updated_at timestamp [null]

status UserStatus [not null]

selected_language_id integer [null, note: 'language should be removed and replaced by this value']

is_online boolean [not null, note: 'An appropriate field is isActive']

scope Scope [not null, note: 'isAdmin should be replaced by this value']

}

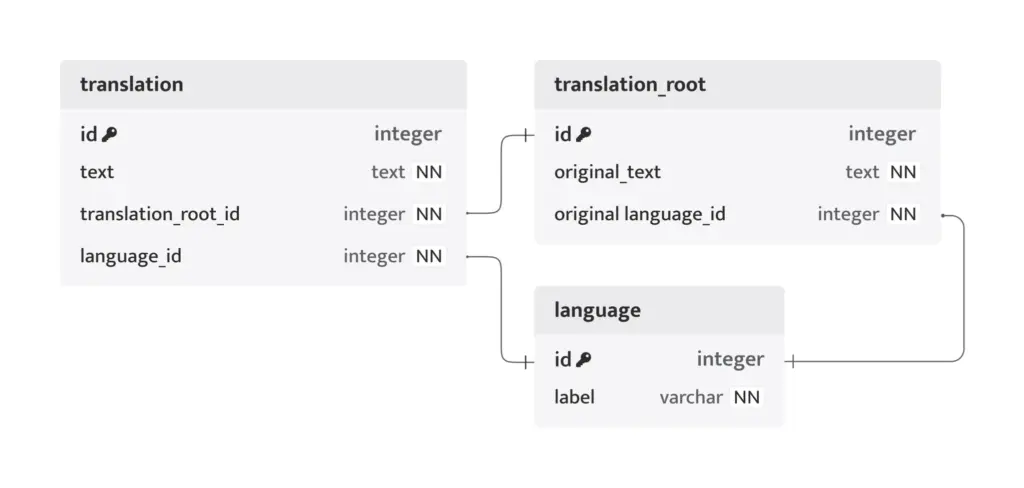

//@Ref: user.selected_language_id > language.idCode language: JavaScript (javascript)A separate case worth considering is language support.

Usually, language support in applications is implemented using JSON files structured with a key-value format. The typical command for this setup would involve getting translations from JSON files corresponding to languages such as English (en), Finnish (fi), etc., based on a specified key for the user’s chosen language.

However, this approach may not suffice for applications requiring dynamic and adaptable translations, which administrators can modify via an admin dashboard, which is the case we had. There were dynamic fields whose text could change (for example, news content) in the application. For supporting various languages, we used a field of type Label:

type Label = {

fi: string;

en: string;

};Consequently, we opted for an unconventional solution, leveraging localization support through a database. We came up with a structure of three tables:

- The

languagetable defines the languages supported by the application. - The

translation_roottable represents the initial entity of the text to be translated. - The

translationtable is responsible for translations into other languages.

Swapping Realtime Database for PostgreSQL

After completing the design phase, we set up TypeORM and created TypeORM entities:

import { Column, Entity, OneToMany, OneToOne, Relation } from "typeorm";

import { Scope } from "../../auth/constants";

import { CommonEntity } from "../../common/entities/language";

import { LanguageEntity } from "../../language/entities/language";

import { UserStatus } from "./constants";

@Entity("user")

export class UserEntity extends CommonEntity {

@Column({ type: "int4", default: UserStatus.ACTIVE })

status: UserStatus;

@Column({ type: "int4", name: "selected_language_id", nullable: true })

public selectedLanguageId: Maybe<number>;

@Column({ type: "bool", name: "is_online", default: true })

public isOnline: boolean;

@Column({ type: "varchar", default: Scope.USER })

public scope: Scope;

@ManyToOne(() => LanguageEntity, (language) => language.users)

@JoinColumn({ name: "selected_language_id" })

selectedLanguage: LanguageEntity;

}Code language: JavaScript (javascript)Then we began replacing all references from Firebase Realtime DB to PostgreSQL. Let’s take changing user permissions as an example, which we addressed during the Firebase Functions migration:

export class UserService {

async editUserAdminStatus(args: UserAdminStatusEditInput): Promise<void> {

const { id, isAdmin } = args;

const scope = isAdmin ? Scope.SUPER_ADMIN : Scope.USER;

await UserEntity.update({ id }, { scope });

}

}Code language: JavaScript (javascript)Next, the biggest task was migrating data to PostgreSQL. We had to consider type compatibility, the order of table migrations, and potential errors. Therefore, we created small services responsible for populating several tables:

@Injectable()

class UserMigrationService {

async migrate(): Promise<void> {

const users = this.userService.firebaseGetAllUsers();

const preparedUsers = await Promise.all(

users.map((user) => this.prepareUser(user))

);

await UserEntity.insert(preparedUsers);

}

private async prepareUser(user: UserObject): Promise<UserEntity> {

return {

id: user.id,

scope: user.isAdmin ? Scope.SUPER_ADMIN : Scope.USER,

selectedLanguageId: await this.getSelectedLanguageId(user),

status: await this.getUserStatus(user),

createdAt: user.createdAt,

updatedAt: user.updatedAt,

isOnline: user.isActive || false,

};

}

private async getSelectedLanguageId(

user: UserObject

): Promise<Maybe<number>> {

if (!user.selectedLanguage) {

return null;

}

const language = await LanguageEntity.findOneBy({

label: user.selectedLanguage,

});

return language?.id;

}

private async getUserStatus(user: UserObject): Promise<UserStatus> {

const isBlocked = await this.userService.firebaseIsUserBlocked(user.id);

if (isBlocked) {

return UserStatus.BLOCKED;

}

if (user.deletedAt) {

return UserStatus.DELETED;

}

return UserStatus.ACTIVE;

}

}Code language: JavaScript (javascript)We created a resulting migration service that determined the execution order of these services:

import { Injectable, Logger } from "@nestjs/common";

import {

UserMigrationService,

LanguageMigrationService,

// ...

} from "./migrations";

@Injectable()

export class FirebaseMigrationService {

private logger = new Logger(FirebaseMigrationService.name);

constructor(

private userMigrationService: UserMigrationService,

private languageMigrationService: LanguageMigrationService // ...

) {}

async migrate(): Promise<void> {

try {

await this.languageMigrationService.migrate();

await this.userMigrationService.migrate();

// ...

this.logger.log("Migration has successfully done");

} catch (error) {

this.logger.error(error);

}

}

}Code language: JavaScript (javascript)We set up a separate service to execute the migration to avoid burdening the main server.

import { NestFactory } from "@nestjs/core";

import { AppModule } from "./app.module";

import { FirebaseMigrationService } from "./modules/firebase-migration/service";

const bootstrap = async (): Promise<void> => {

const app = await NestFactory.create(AppModule);

const firebaseMigrationService = app.get(FirebaseMigrationService);

await firebaseMigrationService.migrate();

};

void bootstrap();Code language: JavaScript (javascript)We ran the migration several times to ensure that we handled everything and to identify any corrupted data in the database.

For example, legacy logic set the profile name to a null when a user profile was deleted. We specified that firstName is mandatory in the GraphQL schema, so null was causing an error when filling the table. Therefore, an additional check was added during the migration:

const firstName = user.firstName || "";Code language: JavaScript (javascript)Data duplication is often encountered when working with NoSQL, so we faced some non-obvious cases. We tackled a lot of the issues related to the inability to adapt all logic to the new structure. For instance, there was previously an adminUsers branch that served as a source of information about admins, and the isAdmin field in the users branch was decorative for convenience. Now there is no equivalent to adminUsers, only the scope column of the user table can determine whether a user is an admin or not. And this is just one example; there were quite a lot of such changes in 43 tables.

After successfully running the migration several times without errors, we scheduled the migration time. To do this, we analyzed user activity, displayed a banner in the application for those who might accidentally access the app during maintenance time, and disabled all functionality.

When time X arrived, we deployed two services: one for running the migration and the other to redirect traffic (the main server). The migration lasted for four hours; all data remained safe and secure. Following the release, a couple of minor errors occurred, but we quickly addressed and resolved them.

Conclusions

Designing and selecting a database, defining data interaction pathways, and choosing technologies lay the foundation for a reliable application that allows your business to expand. However, if the initial choice was incorrect, it does not mean that your working application is

doomed to drown in the data stream. We were able to migrate outdated solutions to new technologies, transitioning from Firebase Realtime DB to PostgreSQL and from serverless to client-server architecture.

During technology migration, we did not halt the application and continued working on bugs and new features, which is crucial for business. At every step, we considered user feedback and adjusted our plans accordingly. And now we share our experience so that you understand the pitfalls that await you.